Hey there, drone fans! Buckle up, because we’re diving into something I’m absolutely obsessed with: using drones to count and detect animals in the wild. This isn’t just cool tech—it’s a game-changer for farmers, ranchers, and conservationists.

Imagine soaring over vast grasslands, spotting every cow, sheep, or horse with pinpoint accuracy, all from a drone buzzing high above. A recent scientific paper, “An Efficient Algorithm for Small Livestock Object Detection in Unmanned Aerial Vehicle Imagery,” drops some mind-blowing advancements that make this possible. Let’s break it down in simple terms, so you can see why this is such a big deal for folks like us who love drones and the great outdoors.

Why Counting Animals from the Sky Matters

Picture this: you’re a rancher with hundreds of acres and thousands of animals. Counting every single cow or sheep on foot? That’s a nightmare: tiring, slow, and easy to mess up. Traditional methods like ground surveys are like hunting for a needle in a haystack. They take forever, cost a ton, and sometimes you miss animals hiding in the brush. Satellites? They’re great for big animals like zebras, but they can’t spot smaller critters like sheep clearly. That’s where drones swoop in like superheroes. They’re fast, flexible, and can snap high-res photos from angles that don’t spook the animals. Plus, they cover huge areas without breaking a sweat.

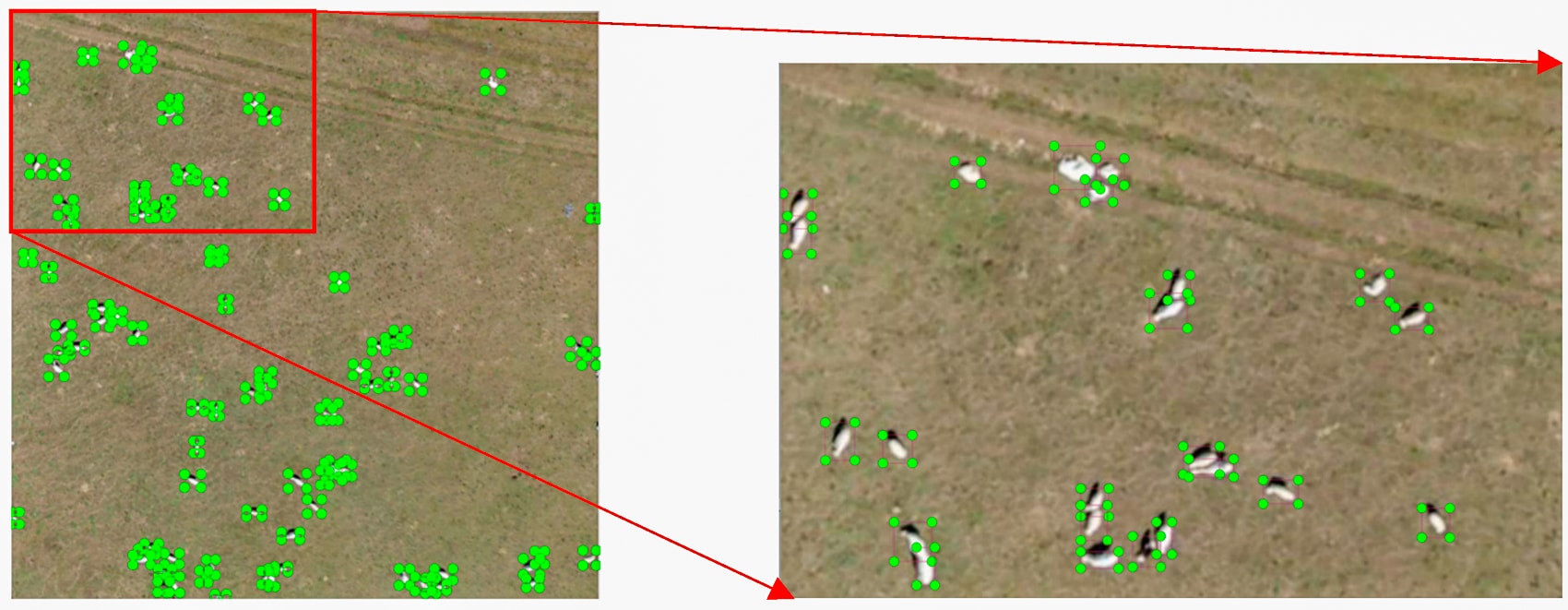

But here’s the catch: animals in drone images often look tiny, like specks in a sea of grass, and they’re packed close together. Spotting them accurately used to be a headache—until now. This new study introduces a slick algorithm called LSNET, built on the YOLOv7 framework (don’t worry, we’ll keep the tech talk simple). It’s like giving your drone x-ray vision to pick out every animal, no matter how small or crowded.

The LSNET Algorithm: Your Drone’s New Best Friend

So, what’s the deal with LSNET? Think of it as a super-smart assistant for your drone’s camera. The researchers tweaked an existing system called YOLOv7, which is already great at spotting objects in images. But livestock in drone shots are tricky—small, bunched up, and sometimes blending into the background. LSNET fixes that with three big upgrades:

- P2 Prediction Head: This is like zooming in on the fine details. LSNET adds a new “eye” (called P2) that works on the network’s shallow, high-res feature maps to catch tiny animals. It also ditches a deeper layer (P5) that was overcomplicating things, making the system lighter and faster.

- Large Kernel Attentions Spatial Pyramid Pooling (LKASPP): Sounds fancy, right? It’s just a way to help the drone “see” both the big picture and small details at once. This module helps the drone understand the scene better, like knowing a sheep from a rock, even in a cluttered field.

- WIoU v3 Loss Function: This is the scoring rule used while the model is being trained. It grades how closely each predicted box lines up with a real animal, which teaches the detector to focus on the right animals and ignore distractions, like shadows or bushes. It’s like teaching your drone to stay sharp and not get fooled by background noise.
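To make that last bullet a bit more concrete, here is a minimal sketch of plain IoU (Intersection over Union), the overlap score that IoU-style losses such as WIoU v3 build on. This is not the WIoU v3 formula from the paper, and the box coordinates are made up for illustration. It shows why tiny animals are hard: the same 4-pixel miss hurts a small box far more than a big one.

```python
def iou(box_a, box_b):
    """Intersection over Union for two boxes given as (x1, y1, x2, y2)."""
    # Corners of the overlapping rectangle (if the boxes overlap at all)
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

# Hypothetical boxes: a 16x16 pixel "sheep" and a guess shifted 4 pixels right
small_truth = (100, 100, 116, 116)
small_guess = (104, 100, 120, 116)

# The same 4-pixel shift on a 160x160 pixel object
big_truth = (100, 100, 260, 260)
big_guess = (104, 100, 264, 260)

print(iou(small_truth, small_guess))          # 0.6
print(round(iou(big_truth, big_guess), 3))    # 0.951
```

A loss built on this score punishes small-box errors heavily, which is part of why the choice of loss function matters so much for specks-in-the-grass livestock.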

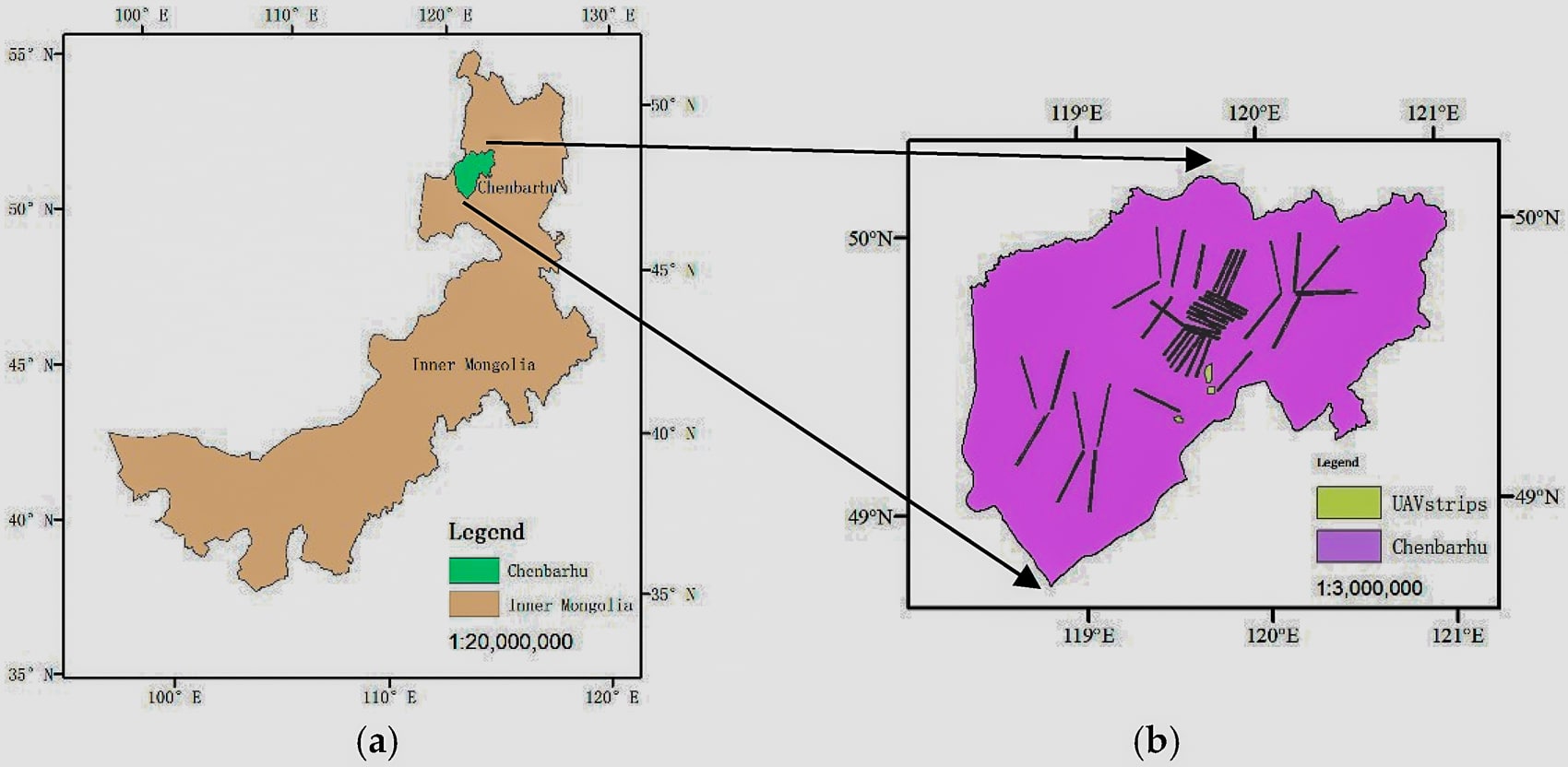

These tweaks make LSNET a beast at spotting cattle, sheep, and horses, even when they’re just dots in a massive drone image. The study tested it on a huge dataset from Hulunbuir, Inner Mongolia: 45,254 images covering 203 square miles (526 km²)! That’s a lot of ground, and LSNET nailed it, boosting accuracy by 1.47% over YOLOv7, hitting a mean Average Precision (mAP) of 93.33%. In plain English, it’s really good at finding animals without missing or misidentifying them.
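If you’re wondering what “without missing or misidentifying” means in numbers, mAP is built from two simpler scores: precision (how many of the detections were real animals) and recall (how many of the real animals were found). Here’s a minimal sketch of those two building blocks. The counts are made up for illustration and are not results from the paper, and the full mAP calculation averages over confidence thresholds and classes, which this skips.

```python
def precision_recall(true_positives, false_positives, false_negatives):
    """Return (precision, recall) from raw detection counts."""
    # Precision: of everything the model flagged, how much was a real animal?
    precision = true_positives / (true_positives + false_positives)
    # Recall: of all the real animals, how many did the model find?
    recall = true_positives / (true_positives + false_negatives)
    return precision, recall

# Hypothetical survey: 90 correct detections, 10 false alarms (rocks, bushes),
# and 5 animals the model missed entirely
p, r = precision_recall(true_positives=90, false_positives=10, false_negatives=5)
print(f"precision={p:.3f} recall={r:.3f}")
```

A detector can cheat one score at the expense of the other (flag everything and recall soars while precision tanks), which is why papers report a combined measure like mAP.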

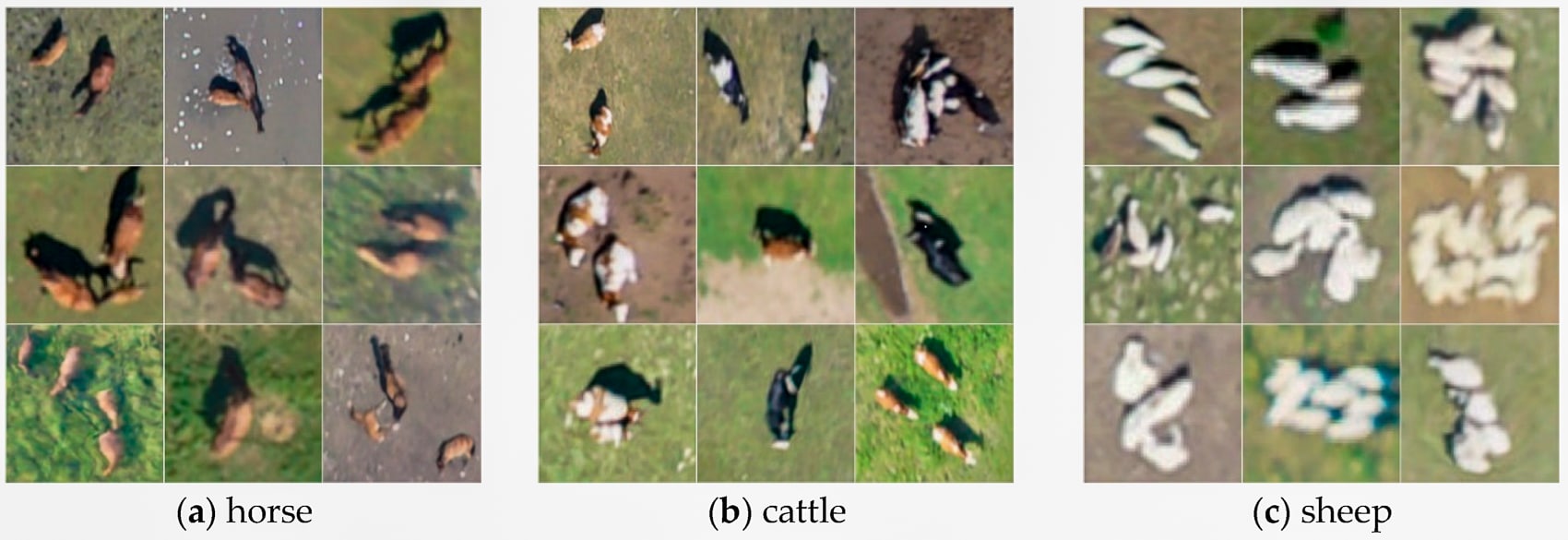

How They Did It: Drones Over the Grasslands

The researchers flew drones over the Prairie Chenbarhu Banner in Hulunbuir, a prime livestock region in China, from July 13 to 26, 2023. They used 45 flights at 984 ft up (300 meters), snapping RGB photos with resolutions as sharp as 4-7 cm per pixel. That’s clear enough to see a sheep’s ears! They captured cattle, sheep, and horses across 4,396 image patches, each carefully labeled to train the LSNET algorithm. Most animals were tiny in these images (some as small as 10-20 pixels), but LSNET handled it like a champ, spotting even the smallest sheep in dense herds.
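Here’s a quick back-of-the-envelope check on why the animals come out so tiny. It assumes the 4-7 cm figure is the ground distance covered by one pixel, and the body lengths below are my own rough guesses, not numbers from the paper.

```python
def pixels_across(object_length_m, ground_resolution_cm):
    """How many pixels an object spans at a given ground resolution."""
    return object_length_m * 100 / ground_resolution_cm

# Rough body lengths in meters (assumptions for illustration only)
animals = {"sheep": 1.0, "cow": 2.0}

for name, length_m in animals.items():
    sharpest = pixels_across(length_m, 4)  # best case: 4 cm per pixel
    coarsest = pixels_across(length_m, 7)  # worst case: 7 cm per pixel
    print(f"{name}: about {coarsest:.0f} to {sharpest:.0f} pixels long")
```

Under those assumptions a sheep is only a couple dozen pixels long at best, which is the same ballpark as the tiny targets described above.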

Compared to older methods like Faster R-CNN (which only hit 19.61% mAP) or even YOLOv5 (89.89% mAP), LSNET’s 93.33% mAP is a huge leap. It’s also lighter, using fewer parameters than other models, so it’s less of a hog on your computer’s power. This means you could potentially run it on a drone or a server without needing a supercomputer.

The Bigger Picture: Drones and Animal Conservation

This isn’t just about counting cows for ranchers. It’s a massive win for conservation too. Drones with LSNET can monitor wildlife without disturbing them, which is huge for protecting endangered species or tracking population changes. Historically, animal counting relied on slow, error-prone methods like ground surveys or low-res satellite images. Advances like LSNET are flipping the script, making drones the go-to tool for accurate, non-invasive monitoring.

Think about it: drones can cover vast areas like Hulunbuir’s grasslands or African savannas, spotting everything from antelopes to zebras. They’re quiet, don’t scare animals, and can fly over rough terrain where humans or vehicles can’t go. Plus, with algorithms like LSNET, you’re not just counting—you’re getting precise data on animal types, locations, and even behaviors, all without stepping foot in their habitat.

What’s Next for Drone Animal Detection?

The study hints at some exciting next steps. Fixed-wing drones could cover even larger areas than the rotor drones used here, making surveys faster and cheaper. The researchers also want to make LSNET leaner, so it can run directly on drones instead of a beefy server. Imagine a drone that processes images in real-time, giving you instant animal counts while it’s still in the air! They’re also looking to test LSNET in tougher conditions, like fog or rain, and expand it to detect other animals, not just livestock. This could mean tracking wolves, deer, or even rare birds, opening up a world of possibilities for wildlife management.

There’s room to grow, though. Ultra-dense herds still trip up the system a bit, and it’s not fully optimized for every weather condition. But with tricks like model pruning (trimming the fat off the algorithm) or transfer learning (teaching it to adapt to new environments), LSNET could become even more versatile. Picture a future where your drone app pings your phone with real-time animal counts, no matter where you are!

Why This Gets Me Pumped for #dronesforgood

I can’t help but geek out over this. As a drone pilot, I’ve seen firsthand how these flying gadgets can do more than just snap cool videos—they’re tools for good. This LSNET study is a perfect example of why the #dronesforgood movement is so exciting. It’s not just about tech for tech’s sake; it’s about using drones to solve real problems, like helping ranchers manage their herds sustainably or letting conservationists protect wildlife without disturbing their homes. Papers like this push the boundaries, showing us how drones can make a difference in ways we never imagined.

The fact that scientists are pouring their brains into making drones smarter for tasks like animal counting gives me hope for the future. It’s proof that #dronesforgood isn’t just a hashtag; it’s a movement that’s changing how we care for our planet. Whether you’re a farmer keeping tabs on your sheep or a conservationist tracking endangered species, this tech is your wingman. So, here’s to more breakthroughs like LSNET, more drones in the sky, and more ways to make the world a better place, one flight at a time!

Images courtesy of the Key Laboratory of Land Surface Pattern and Simulation, Institute of Geographic Sciences and Natural Resources Research, Chinese Academy of Sciences
